In 1872, Charles Darwin published The Expression of the Emotions in Man and Animals, in which he argued that mammals show emotion reliably in their faces. Since then, thousands of studies have confirmed the robustness of Darwin’s argument in many fields, including linguistics, semiotics, social psychology, and computer science. More interestingly, several studies, including those of renowned psychologist Paul Ekman, demonstrated that basic emotions are, indeed, universal. Affectiva, a Massachusetts Institute of Technology spinoff located in Waltham, Massachusetts, builds a variety of products that harness the two main characteristics of facial expressions—robustness and universality—to measure and analyze emotional responses.

As part of the “Entrepreneur’s Corner” series, I had the opportunity to speak with Affectiva’s cofounder and CEO, Rana el Kaliouby, about the opportunities that these products provide in areas such as mental health, mood tracking, and telemedicine as well as the challenges associated with achieving business success.

Entrepreneur’s Corner: Rana el Kaliouby

Rana el Kaliouby is the cofounder and CEO of Affectiva, which is pioneering emotion-aware technology, the next frontier of artificial intelligence. Prior to founding Affectiva, as a research scientist at the MIT Media Lab, el Kaliouby spearheaded the applications of emotion technology in a variety of fields, including mental health and autism research. Her work has appeared in numerous publications, including The New Yorker, Wired, Forbes, Fast Company, The Wall Street Journal, The New York Times, TIME, and Fortune, as well as on CNN and CBS. A TED speaker, she was recognized by Entrepreneur as one of the “7 Most Powerful Women to Watch In 2014,” inducted into the Women in Engineering Hall of Fame, and listed on Ad Age’s “40 Under 40.” She also received the 2012 Technology Review Top 35 Innovators Under 35 Award and Smithsonian Magazine’s 2015 American Ingenuity Award for Technology.

IEEE Pulse: What is the problem that Affectiva set out to solve?

Rana el Kaliouby: Emotions matter. Our emotional and mental states influence every aspect of our lives, from how we connect and communicate with one another to how we learn and make decisions, as well as our overall health and well-being. However, in our increasingly digital world filled with hyperconnected devices, smart technology, and advanced artificial intelligence (AI) systems, emotion is missing. We are able to analyze and measure physical fitness, but we are not yet tracking emotions to get a complete understanding of our overall well-being. This hampers not only how we humans interact with technology but also how we communicate with each other as more of our communications take place online.

We also know from years of research that emotional intelligence is a crucial component of human intelligence. People who have a higher emotional quotient lead more successful professional and personal lives, are healthier, and even live longer. Technology that can read and respond to emotion can improve our overall health and well-being. We are giving machines the ability to sense and respond to human emotion, something that, of course, is very deeply human but that today’s technology has not been capable of doing. We like to say we are bringing AI to life!

My work during the past 16 years and, most recently, at Affectiva has been about bringing emotional intelligence to the digital world. Affectiva’s mission is to humanize our technology, and its Emotion AI platform will power these emotion-aware technologies and digital experiences. In the past, all of your emotional expressions in a digital context disappeared into oblivion. With Affectiva, it’s oblivion no longer. Our Emotion AI platform captures these emotion data points, and our emotion analytics [are] used to derive insights as well as design real-time experiences that adapt to human emotion in the moment.

IEEE Pulse: How does the technology work?

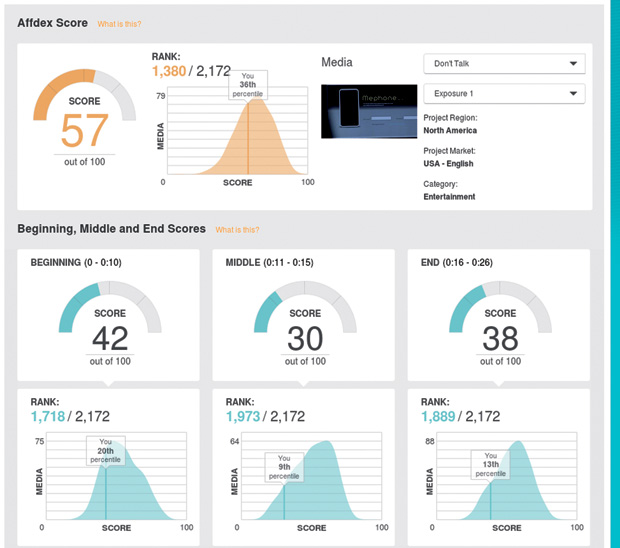

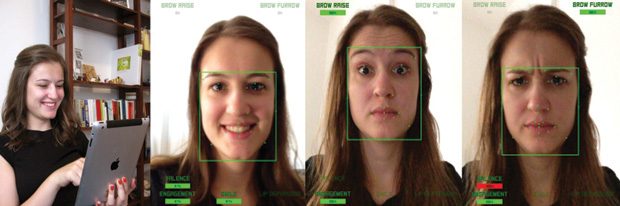

el Kaliouby: Affectiva’s facial coding and emotion analytics technology (Affdex) relies on computer vision to perform its highly accurate, scalable, and repeatable analysis of facial expressions (Figure 1). (Computer vision is the use of computer algorithms to understand the contents of images and video.) In the case of Affectiva, the computer replaces the human who would otherwise watch for facial reactions (human coders certified in the Facial Action Coding System, or FACS). When consumers participate in an Affdex-enabled experience with a device, computer-vision algorithms watch and analyze the video feed generated from the webcam or any other optical sensor (Figure 2).

Our technology, like many computer-vision approaches, relies on machine learning. Machine learning refers to techniques in which algorithms learn from examples (training data). Rather than building specific rules that identify when a person is making a specific expression, we instead focus our attention on building intelligent algorithms that can learn (or be trained) to recognize expressions (Figure 3). At the core of machine learning are two primary components. The first is data: like any learning task, machines learn through examples and can learn better when they have access to massive amounts of data. The second is algorithms: how machines extract, condense, and use information learned from examples.
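The difference between hand-written rules and learning from examples can be made concrete with a toy classifier. The sketch below is not Affectiva's algorithm: it trains a plain logistic regression on a handful of synthetic examples described by two hypothetical features (`lip_corner_pull`, `cheek_raise`), so that the rule separating "smile" from "no smile" is learned from labeled data rather than coded by hand.

```python
import math

# Synthetic, hand-made training examples (illustration only).
# Features: (lip_corner_pull, cheek_raise) intensities in [0, 1] (hypothetical).
# Label: 1 = "smile", 0 = "no smile".
TRAINING_DATA = [
    ((0.9, 0.8), 1), ((0.8, 0.6), 1), ((0.7, 0.9), 1), ((0.6, 0.7), 1),
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.2), 0), ((0.0, 0.3), 0),
]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(data, lr=0.5, epochs=2000):
    """Learn weights from labeled examples with stochastic gradient descent on log loss."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label  # derivative of log loss with respect to z
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, features):
    """Return the learned probability that the expression is a smile."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


weights, bias = train_logistic_regression(TRAINING_DATA)
print(predict(weights, bias, (0.85, 0.75)))  # close to 1
print(predict(weights, bias, (0.15, 0.10)))  # close to 0
```

Adding more (and more varied) examples changes what the model learns without anyone rewriting a rule, which is why the size and diversity of the training data matter so much.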

IEEE Pulse: But wouldn’t this approach require a huge amount of data?

el Kaliouby: Oh yes! Our emotion data repository is the world’s largest emotion database, with more than 4.8 million faces collected from people in 75 countries, amounting to approximately 56 billion emotion data points. (Here’s the math: we have collected 4.8 million face video sessions, and each video is, on average, 37.78 seconds long. We sample at 14 frames/second, and, for each frame, we log 22 emotion metrics: 4,879,005 × 37.78 × 14 × 22 = 56,773,273,141, i.e., more than 56 billion emotion data points.) In contrast, in academia, an average facial emotion data set is only in the thousands and is [in] no way as diverse and ecologically valid as our data. We add 50 million emotion data points every day!
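The data-point arithmetic quoted above can be checked directly. The short sketch below uses only the figures given in the interview; it keeps the mean session length in hundredths of a second so the product stays in exact integer arithmetic, then converts back at the end.

```python
# Figures quoted in the interview.
SESSIONS = 4_879_005          # face video sessions collected
MEAN_DURATION_CS = 3_778      # mean session length, 37.78 s, held in centiseconds
FRAMES_PER_SECOND = 14        # sampling rate
METRICS_PER_FRAME = 22        # emotion metrics logged per frame


def total_data_points():
    """Sessions x seconds x frames/second x metrics/frame, computed exactly.

    Dividing by 100 converts centiseconds back to seconds; floor division
    drops the fractional data point left over by the average duration.
    """
    product = SESSIONS * MEAN_DURATION_CS * FRAMES_PER_SECOND * METRICS_PER_FRAME
    return product // 100


print(f"{total_data_points():,}")  # 56,773,273,141
```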

All of these data are collected with the individuals’ consent. Typically, as people are engaging with a digital experience (e.g., watching an online ad or playing a game), we ask if they are okay turning their camera on and sharing their emotions as they view this content. If we get their consent, these data then flow to our server, where they are processed and logged. If they do not consent, we never turn on the camera, and nothing happens. The emotion data are used 1) to power our deep-learning and machine-learning algorithms, 2) to feed into our norms and benchmarks, and 3) as input into our predictive analytics models so we can tie a person’s emotional state to his or her behavior.

IEEE Pulse: Tell us about some of the application areas in health care.

el Kaliouby: The applications are numerous, so I will talk about just a few of them. One area is mood tracking and mindfulness. Fitness devices and mindfulness programs have the potential to track and analyze our emotional state. This enables us to keep mood journals and, when aggregating emotion data with other data, understand what influences our emotions.
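As a rough illustration of aggregating emotion data with other data, the sketch below groups mood-journal entries by a context tag and averages them. The entries, the context tags, and the valence scale in [-1, 1] are all hypothetical; in a real product the valence might be derived from facial emotion metrics and the context from a calendar or activity tracker.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mood-journal entries: (context tag, valence score in [-1, 1]).
entries = [
    ("exercise", 0.6), ("commute", -0.3), ("exercise", 0.4),
    ("meeting", -0.1), ("commute", -0.5), ("meeting", 0.1),
]


def mean_valence_by_context(entries):
    """Group valence scores by context tag and return the average per tag."""
    groups = defaultdict(list)
    for context, valence in entries:
        groups[context].append(valence)
    return {context: mean(values) for context, values in groups.items()}


# List contexts from most negative to most positive average mood.
for context, avg in sorted(mean_valence_by_context(entries).items(), key=lambda kv: kv[1]):
    print(f"{context:10s} {avg:+.2f}")
```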

Another is mental health monitoring. Facial expressions can be indicators of mental health issues, such as depression, trauma, and anxiety. Some mental health disorders have soft biomarkers that are expressed in the face; depression, for example, can show up as droopy eyelids and dampened facial expressions. By digitally tracking facial expressions of emotion between doctor visits, we can flag when patients deviate from their own norm. This also allows for personalized therapy.
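Flagging a deviation "from their norm" implies comparing each new measurement with the patient's own baseline. The sketch below uses a simple z-score rule as one possible way to do that; the daily "expressiveness" metric, the baseline values, and the two-standard-deviation threshold are assumptions for illustration, not a clinical method or Affectiva's approach.

```python
import math
from statistics import mean, stdev


def deviation_z_score(baseline, value):
    """How many standard deviations `value` lies from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly constant baseline: any change is an unbounded deviation.
        return 0.0 if value == mu else math.copysign(math.inf, value - mu)
    return (value - mu) / sigma


def flag_deviation(baseline, value, threshold=2.0):
    """Flag `value` if it differs from the patient's own norm by more than
    `threshold` standard deviations (hypothetical default)."""
    return abs(deviation_z_score(baseline, value)) > threshold


# Hypothetical daily expressiveness scores (0 = flat face, 1 = highly expressive).
baseline = [0.52, 0.48, 0.55, 0.50, 0.47, 0.53, 0.49]
print(flag_deviation(baseline, 0.51))  # False: within the usual range
print(flag_deviation(baseline, 0.31))  # True: markedly dampened expressions
```

Because the baseline is per patient, the same absolute score can be normal for one person and a warning sign for another.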

The technology could also be used for pain assessment. It is time for a measure of pain that is more objective, based on how pain is naturally reflected in the face, than the subjective self-reported pain scale. The face is one of the most robust ways of communicating pain, often manifested in brow lowering, skin drawn tightly around the eyes, and a horizontally stretched open mouth with deepening of the nasolabial furrows. Objectively quantifying pain is particularly important in nonverbal populations, such as young children. Such a system can make routine observations of facial behavior that can be used in clinical settings as well as in postoperative care.
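Several of the cues listed above overlap with a published FACS-based pain metric, the Prkachin and Solomon Pain Intensity (PSPI) score. The sketch below computes it from action-unit (AU) intensities; it is offered as a well-known example from the pain literature, not as Affectiva's method. The AU intensities would have to come from a certified coder or an automated detector, and the example frame is hypothetical. Note that PSPI does not include the horizontally stretched mouth.

```python
def pspi(au):
    """Prkachin and Solomon Pain Intensity (PSPI) from FACS action units.

    PSPI = AU4 + max(AU6, AU7) + max(AU9, AU10) + AU43
      AU4        brow lowerer                           (intensity 0-5)
      AU6, AU7   cheek raiser, lid tightener (eyes)     (intensity 0-5)
      AU9, AU10  nose wrinkler, upper lip raiser        (intensity 0-5)
      AU43       eyes closed                            (0 or 1)
    The score ranges from 0 to 16. AUs missing from `au` count as 0.
    """
    for unit, value in au.items():
        limit = 1 if unit == 43 else 5
        if not 0 <= value <= limit:
            raise ValueError(f"AU{unit} intensity {value} outside 0-{limit}")

    def get(unit):
        return au.get(unit, 0)

    return get(4) + max(get(6), get(7)) + max(get(9), get(10)) + get(43)


frame = {4: 3, 6: 2, 7: 4, 9: 1, 10: 2}  # hypothetical coded video frame
print(pspi(frame))  # 3 + 4 + 2 + 0 = 9
```

Scoring every sampled frame this way yields a pain-expression time series that can be reviewed between routine observations.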

Telemedicine is another venue. Health care providers can get real-time feedback through video communication on the emotional state of patients, or patients can gather continuous, longitudinal emotion data to send to their health care providers. Empathy training is another application. High physician empathy has been linked to a decrease in malpractice claims. Higher empathy also leads to better communication, which leads to better outcomes. Emotion technology can be used in empathy training to measure not only how providers react to patients but also the timing of their reaction.
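Measuring the timing of a provider's reaction could be as simple as pairing each patient expression onset with the next provider expression onset. The sketch below assumes two hypothetical, already-detected event streams (for example, onsets of patient distress and of provider concern, in seconds) and a five-second response window; none of these names or values come from Affectiva.

```python
from bisect import bisect_right


def reaction_latencies(patient_onsets, provider_onsets, max_latency=5.0):
    """For each patient expression onset, return the delay until the provider's
    next expression onset, or None if none occurs within `max_latency` seconds.

    Both inputs are timestamps in seconds, sorted in ascending order.
    """
    latencies = []
    for t in patient_onsets:
        i = bisect_right(provider_onsets, t)  # first provider onset strictly after t
        if i < len(provider_onsets) and provider_onsets[i] - t <= max_latency:
            latencies.append(provider_onsets[i] - t)
        else:
            latencies.append(None)
    return latencies


patient = [10.0, 30.0, 50.0]   # hypothetical patient distress onsets
provider = [11.5, 38.0, 51.0]  # hypothetical provider concern onsets
print(reaction_latencies(patient, provider))  # [1.5, None, 1.0]
```

Both whether the provider reacted and how quickly then become measurable quantities that can be tracked over a training program.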

And finally, there are potential benefits for autism research. People on the autism spectrum often have difficulty reading emotions and regulating their own emotions. Emotion technology can enable devices to serve as aids in interpreting emotions and prompting behavioral cues. One of our partners, BrainPower, uses Google Glass to implement real-time gaming experiences that help individuals on the autism spectrum learn about facial expressions of emotion.

IEEE Pulse: Entrepreneurs often wonder how much of success is strategy and how much is execution and hard work. What is your take on this?

el Kaliouby: I firmly believe that there is no such thing as an overnight success, especially for ideas and/or startups that are pushing the state of the art and introducing a paradigm shift in how we experience technology. Strategy is critical, of course, but execution and hard work are also necessary. I have learned that, to be successful, 1) you have to be the world’s expert at something, 2) perseverance is key (because, if it’s novel at all, many people will shoot your idea or you down!), and 3) passion is critical: it’s not going to be an easy ride, so you had better really care about the mission. Basically, you need grit. And a lot of it. I love this saying, which applies to my approach: “People who think it can’t be done should not stand in the way of people who are doing it!”

IEEE Pulse: Another important issue that holds many entrepreneurs back is fear of failure. Where do you draw the line between healthy and unhealthy levels of fear of failure?

el Kaliouby: Confession: I definitely have a fear of failure. But you know what? I have failed before, and the key is to fail fast, rally, and then move on. At every round of funding, we were turned down by several investors, but we also managed to secure an amazing roster of top-tier investors, including Kleiner Perkins Caufield & Byers, WPP, and Fenox Venture Capital. We pushed out products that didn’t stick, but we acknowledged that with “Okay! I guess that didn’t work, so let’s try something else.” There were so many stops along the journey where we could have given in, but we didn’t.

IEEE Pulse: You are the cofounder and CEO of the company. What’s your advice to potential technical founders with regard to both of these positions?

el Kaliouby: When we started Affectiva, my cofounder, Prof. Rosalind W. Picard, and I decided to hire seasoned business expertise to complement our academic expertise. I have learned so much from our two previous CEOs, and I am very grateful. Five years on, I know much more about how to run and grow a business, define a strategy, and create a road map and execute it. I’ve come a long way.

Ultimately, though, I think the founder has a vision and is the keeper of that vision. The founder is the believer, the evangelist. And it’s important that the founder is empowered to execute that vision and have a team of people aligned around it. Everyone, seasoned or not, will make mistakes along the way. I have found that founders, in particular, are willing to step outside of their comfort zones to make their vision happen. And that’s what is needed to succeed.

IEEE Pulse: Where do you think this whole area of emotion analysis and quantification is headed, and what is your vision for Affectiva?

el Kaliouby: Our vision is that, one day, all of our devices will have an emotion chip and will be able to read and respond to our emotions. We will interact with our technologies—be it our phones, cars, AI assistants, or even social robots—in the same way we interact with one another: with emotion! I think that, in three to five years, we will forget what it was like when our devices didn’t understand emotion. It’s similar to how we all assume that our phones today are location aware and have GPS chipsets in them. Someday soon, it will be the same for emotions.