“The future of medicine is in your smartphone,” proclaimed an eminent medical researcher in a 2015 Wall Street Journal essay. In a sense, that future has already arrived, judging from the proliferation of apps and medical devices now connected to smartphones. One 2015 industry study identified more than 165,000 health-related smartphone apps available from the Google Play Store and Apple iTunes. But to what extent does this technology lead to improved patient outcomes? That question is one for evidence-based medicine, to be answered by clinical trials and systematic reviews by medical experts.

Current Reviews and Reports on Medical Apps

An important partial answer to this question came in mid-December 2016, when the Cochrane Collaboration, which conducts highly regarded systematic reviews of medical interventions of all sorts, released a major report on the use of automated telephone communications systems (ATCS) for preventing disease and managing chronic conditions. This massive 533-page report evaluated 132 clinical trials with more than 4 million participants, following the same rigorous process that Cochrane uses for its assessments of other interventions.

The authors defined ATCS broadly as “a technology platform through which health professionals can collect relevant information or deliver decision support, goal setting, coaching, reminders, or health-related knowledge to consumers via smartphones, tablets, landlines, or mobile phones, using either telephones’ touch-tone keypad or voice recognition software.” (A related rubric is mHealth, which the World Health Organization defines as “medical and public health practice supported by mobile devices, such as mobile phones, patient monitoring devices, personal digital assistants, and other wireless devices.”) Thus, ATCS encompass a range of services, from simple telephone reminders of appointments to two-way communication between patients and providers that may include transmission of patient data to healthcare professionals. The review covered studies published from 1980 (when, presumably, some patients were still using rotary dial phones) through June 2015. However, a large fraction (perhaps most) of the papers examined in the Cochrane Review involved the use of smartphones and can be considered forms of mHealth.

The Cochrane report had positive but also mixed conclusions. “Our results show that ATCS may improve health-related outcomes in some long-term health conditions,” remarked lead author Josip Car from the Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore. But many studies reviewed by the project failed to show improvements in patient outcomes from the use of ATCS technology. Where improvements were observed, they were often modest (Table 1, below). Much of the evidence for effectiveness, the report concluded, was “moderate” or “poor” in quality due to limitations in the clinical studies.

[accordion title=”Table 1. A Capsule Summary of ATCS Surveyed by Cochrane Review (2016 December)”]

Outcomes of Apps for Preventative Care

| Probably does have a positive effect on: | Probably little effect on: |

|

|

Outcomes for Condition-Specific Apps

| Probably has positive outcomes in terms of: | Probably has little or no effect on outcomes related to: | Insufficient evidence related to: |

|

|

|

[/accordion]

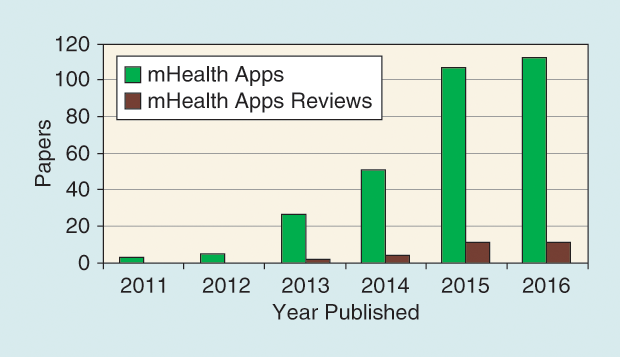

To some extent, deficiencies in the apps themselves may have led to their ineffectiveness. In late December 2016, we searched the Institute for Scientific Information Web of Science (now maintained by Clarivate Analytics) using the terms “smartphone and app and health.” The search results included 304 papers, most of which have appeared in the past two years (Figure 1). Roughly 20 of these papers were comprehensive reviews of smartphone apps in particular medical fields. Most of these reviews expressed optimism about the potential usefulness of apps and of mHealth in general, but most also noted many limitations in the apps (Table 2, below). Indeed, the weakness of the medical component of many health care-related apps is painfully obvious.

[accordion title=”Table 2. Seven comprehensive reviews of smartphone medical apps that appeared in 2016.”]

Except as noted, all of these apps are intended for use by consumers.

Category: Stress management (Coulon 2016)

902 apps screened and evaluated, of which 60 met inclusion criteria

Conclusion: “32 apps included both evidence-based content and exhibited no problems with usability or functionality”; “these apps have the potential to effectively supplement medical care”

Category: Weight loss app for Arabic speakers (Alnasser 2016)

298 apps screened and evaluated, of which 65 met inclusion criteria

Conclusion: “The median number of evidence-informed practices was 1, with no apps having more than 6 and only 9 apps including 4–6. These findings identify serious weaknesses in the currently available Arabic weight-loss apps.”

Category: Mental health (Radovic 2016)

208 apps reviewed (chiefly, symptom relief and general mental health education)

Conclusion: “Most app descriptions did not include information to substantiate stated effectiveness of the application and had no mention of privacy or security. Due to uncertainty of the helpfulness of readily available mental health applications, clinicians working with mental health patients should inquire about and provide guidance on application use, and patients should have access to ways to assess the potential utility of these applications.”

Category: Inflammatory bowel disease self-management (Con 2016)

238 apps screened and evaluated, of which 26 met inclusion criteria

Conclusion: “Apps may provide a useful adjunct to the management of [inflammatory bowel disease] patients. However, a majority of current apps suffer from a lack of professional medical involvement and limited coverage of international consensus guidelines.”

Category: Suicide prevention (Larsen 2016)

123 apps screened and evaluated, of which 49 met inclusion criteria

Conclusion: “All reviewed apps contained at least one strategy that was broadly consistent with the evidence base or best-practice guidelines. … Potentially harmful content, such as listing lethal access to means or encouraging risky behaviour in a crisis, was also identified.” “Clinicians should be wary in recommending apps, especially as potentially harmful content can be presented as helpful.”

Category: Weight management (Bardus 2016)

Review of 23 apps

Conclusion: “overall moderate quality … more attention to information quality and evidence-based content are warranted”

Category: Nutritional tracking for diabetics (Darby et al 2016)

11,000 apps screened and evaluated, of which 42 met inclusion criteria

Conclusion: “The apps considered in this review provide great potential for improving outcomes in patients with diabetes but also do include some limitations… In the future, actual testing of a subset of apps in patients with diabetes should be considered”

[/accordion]

Many of the apps were insufficiently informed by best practices that experts in health interventions have developed over the years and did not follow guidelines of evidence-based medicine. Some even lacked obvious measures to ensure validity. In their 2014 review of 107 apps for self-management of hypertension, Kumar et al. found that “none of these apps employed the use of a [blood pressure] cuff or had any documentation of validation against a gold standard.” One author considered mHealth to be “a strategic field without a solid scientific soul.”

Even worse, some of the apps are potentially dangerous. In a 2016 review of suicide prevention apps, Larsen et al. complained that some apps provided “potentially harmful content, such as listing lethal access to means [of suicide].” Many apps raised obvious privacy concerns for patients, and their purveyors can be suspected of selling patient data in some cases.

Detecting Atrial Fibrillation

But, clearly, mHealth offers unprecedented possibilities with far-reaching consequences by enabling long-term monitoring of patients for medical conditions, analyzing health data on smartphones held by the patient, and transmitting data to healthcare providers. Screening for “silent” atrial fibrillation (AF) is a case in point. Unlike ventricular fibrillation, which is lethal within minutes, AF is not immediately life-threatening; however, over time, it increases the risk of stroke and other events. Persistent AF is easy to diagnose with an electrocardiogram (ECG), but sporadic bouts of AF may be asymptomatic and remain undetected. Some experts estimate that about one quarter of AF cases are “silent” and presently undiagnosed (see “mHealth Screening for Atrial Fibrillation”).

[accordion title=”mHealth Screening for Atrial Fibrillation”]

The prospective benefits of screening (for any medical condition) to both the individual patient and the affected population, in terms of reduced burden of disease, depend on many factors. Two recent studies suggest scenarios for screening for atrial fibrillation (AF) that could have a substantial health impact.

The first is a 2016 study by Belgian investigator Lien Desteghe and colleagues, who compared the performance of two hand-held ECG devices, Kardia Mobile (AliveCor) and MyDiagnostik (Applied Biomedical Systems, Maastricht, The Netherlands), against a standard 12-lead ECG examination. The study included 469 patients in the cardiology and geriatric wards of two Belgian hospitals. For the geriatric patients, the AliveCor device correctly identified approximately 80% of the individuals with AF, while confirming the absence of AF in virtually all of the patients who did not have that arrhythmia as determined by the gold standard ECG test. (The performance of the MyDiagnostik device was similar.)

While these investigators found the devices to be “suboptimal” in performance compared to the gold standard 12-lead ECG, they nevertheless found important benefits of the technology. Screening patients for AF with such devices is much less expensive than having a cardiologist read a standard 12-lead ECG, and it is more reliable than having a nurse check the patients’ pulses for abnormal rhythms. The researchers estimated that if a “structured screening strategy” were used (limiting screening to patients with no known AF who had no implanted cardiac devices), then new AF cases could be identified and strokes prevented in this population at comparatively low cost. Whether similar encouraging results would be found with other screening populations remains to be seen.

In a very different setting, a team of investigators led by David McManus (University of Massachusetts) reported in 2016 a study that screened residents of four villages in rural India with the AliveCor device. The investigators identified AF in 5% of the screened individuals, a prevalence similar to that in Western countries. This prevalence is much higher than previously accepted for India; evidently, most AF cases escape diagnosis in the Indian healthcare system. “Mobile technologies may help overcome resource limitations for atrial fibrillation screening in underserved and low-resource settings,” the authors conclude. The devices are inexpensive and can be used reliably by village healthcare workers with little formal medical training, both important benefits. Providing adequate follow-up care may be a challenge, though.

[/accordion]
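The accuracy figures quoted in the screening sidebar (about 80% of AF cases detected, with almost no false alarms) correspond to what epidemiologists call sensitivity and specificity. The sketch below shows how those quantities, and the chance that a positive screen is a true case, follow from simple counts. The counts and the 98% specificity are hypothetical values chosen only to illustrate the arithmetic; they are not taken from the Desteghe or Soni studies, and the class and function names are our own.

```python
from dataclasses import dataclass


@dataclass
class ScreeningCounts:
    """Counts from comparing a screening device with a reference ECG."""
    true_pos: int   # device flags AF, reference ECG confirms AF
    false_neg: int  # device misses AF that the reference ECG finds
    true_neg: int   # device and reference ECG agree there is no AF
    false_pos: int  # device flags AF, reference ECG shows no AF


def sensitivity(c: ScreeningCounts) -> float:
    """Fraction of true AF cases that the device detects."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ScreeningCounts) -> float:
    """Fraction of AF-free individuals that the device correctly clears."""
    return c.true_neg / (c.true_neg + c.false_pos)


def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """Bayes' rule: probability that a positive screen is a true AF case."""
    true_pos_rate = sens * prevalence
    false_pos_rate = (1.0 - spec) * (1.0 - prevalence)
    return true_pos_rate / (true_pos_rate + false_pos_rate)


# Hypothetical counts (not study data), chosen to give 80% sensitivity.
counts = ScreeningCounts(true_pos=16, false_neg=4, true_neg=98, false_pos=2)
sens = sensitivity(counts)
spec = specificity(counts)
# 5% prevalence echoes the figure reported for the rural India screening.
ppv = positive_predictive_value(sens, spec, prevalence=0.05)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} PPV={ppv:.2f}")
```

Under these assumed numbers, a sizable share of positive screens would still be false alarms, because AF-free individuals greatly outnumber true cases. This is one reason why reliable detection alone does not establish that screening improves outcomes.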

In 2013, David McManus and his colleagues at the University of Massachusetts and Worcester Polytechnic Institute, Massachusetts, showed that a smartphone, with its camera placed against the skin, can measure pulse irregularities and reliably detect AF. Since then, six clinical studies, albeit small ones, have shown that smartphones can reliably detect AF. The apps either measure pulse rate by using the phone’s camera to detect pulsatile changes in blood flow (photoplethysmography, the approach taken by McManus) or record single-channel ECGs via electrodes attached to the smartphone, and then use sophisticated algorithms to identify AF.
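To give a sense of what detecting an irregular pulse can mean computationally, here is a minimal sketch. It assumes the app has already extracted the time between successive heartbeats (from the camera signal or an ECG) and applies one common heart-rate-variability statistic, the root mean square of successive differences (RMSSD), normalized by the mean interval. The threshold of 0.1 and the sample intervals are hypothetical placeholders, and this is not necessarily the algorithm used in any of the apps or studies discussed here.

```python
import math


def normalized_rmssd(intervals_s):
    """RMSSD of inter-beat intervals (seconds), divided by the mean interval."""
    if len(intervals_s) < 3:
        raise ValueError("need at least three inter-beat intervals")
    # Differences between each interval and the one that follows it.
    diffs = [b - a for a, b in zip(intervals_s, intervals_s[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    mean_interval = sum(intervals_s) / len(intervals_s)
    return rmssd / mean_interval


def looks_irregular(intervals_s, threshold=0.1):
    """Flag a recording as irregular. The threshold is a hypothetical value."""
    return normalized_rmssd(intervals_s) > threshold


# Made-up interval series: a steady rhythm and an erratic one.
regular = [0.80, 0.82, 0.79, 0.81, 0.80, 0.81]
irregular = [0.62, 1.05, 0.48, 0.91, 0.70, 1.20]

print("regular flagged:", looks_irregular(regular))
print("irregular flagged:", looks_irregular(irregular))
```

A real detector would also have to reject motion artifacts and distinguish AF from other irregular rhythms, which is where much of the difficulty lies.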

An example of this second approach is Kardia Mobile by AliveCor (Mountain View, California). This US$100 device is connected to the phone by a wireless link and is normally mounted to the back of the phone. When the user touches a pair of electrodes on the device, it generates a one-lead ECG signal that can be stored in the phone and viewed by the patient (after authorization by a physician) or transmitted to healthcare providers. The companion app provides an “instant analysis” that tells the patient if AF is present. In March 2016, AliveCor announced a wristband for the Apple Watch that has embedded conductors and is wirelessly linked to the watch. By touching the outer surface of the band, the user can capture one-lead ECGs and have the device indicate the presence of AF or normal heart rhythm.

Other apps that attempt to detect AF using only the phone, with its camera placed against the skin, have had mixed success at best. Reviews by users of one app (Photo AFib Detector, available from both iTunes and the Google Play Store) noted that it failed to detect their AF. One reviewer complained that the app’s only means of sharing results is to post them on Facebook, which raises obvious privacy concerns. CCApp, the Hong Kong company that developed the app, provides no information about how it has been tested or how accurate it is. The company has no apparent medical competence; its main product is an app that uses artificial intelligence to identify optimal locations of a house or office according to the traditional Chinese principles of feng shui.

Apart from smartphone apps, many new mHealth devices for cardiology are coming onto the market. Zio Patch (iRhythm Technologies) is a stand-alone device that attaches to the body and records a single-channel ECG for up to 14 days. Then, the patient mails the device to the company, which analyzes the data and prepares a report for the patient’s physician.

Using such devices to diagnose AF under physicians’ orders would pose few problems (assuming they work reliably). Doctors have been using Holter monitors to collect one or two days’ ECG data for diagnosis of arrhythmias since the early 1970s. The new elements are the sale of ECG-screening devices directly to the public, bypassing traditional channels of distribution of monitoring devices, and the push to use such devices to screen asymptomatic individuals for silent or asymptomatic AF.

Selling such devices directly to the public will create new pressures on the healthcare system. On its website (and in many ads on the Internet), AliveCor claims that a user can “relay [heart activity data] to your doctor to inform your diagnosis and treatment plan.” But a busy healthcare facility such as the Hospital of the University of Pennsylvania has thousands of cardiac care patients and is ill equipped to handle streams of data of variable quality sent to it by thousands of patients with wireless-enabled monitoring devices. Mechanisms must be established to compensate healthcare facilities for the additional staff time needed to manage and interpret these data.

Despite occasional reports of incorrect detection of AF by the AliveCor device, it appears to be generally reliable. Such wearable devices can improve patients’ awareness of heart health and facilitate treatment by their doctors. However, the benefits of screening asymptomatic individuals for AF, which is facilitated by mHealth devices, remain unclear, particularly for individuals suffering rare bouts of AF.

“How much atrial fibrillation constitutes a mandate for therapy?” ask Kirchhof et al. in one 2016 set of evidence-based guidelines for management of AF. A diagnosis of AF will lead to anticoagulant therapy, which has its own set of risks to the patient, and it will increase the patient’s utilization of medical services, with potentially significant economic consequences.

Insurance giant Aetna currently covers the use of long-term monitoring with the iRhythm Technologies and other devices to diagnose patients with unexplained symptoms suggesting cardiac arrhythmias—as it has long done with Holter monitoring. Aetna presently does not cover use of AliveCor and several other devices “because their clinical value has not been established.” Nor does it cover the costs of screening asymptomatic individuals for AF in the absence of clinical proof that such screening actually improves patient outcomes. Needless to say, obtaining such proof is far harder than simply showing that the devices can reliably detect AF.

To assess the effectiveness of new mHealth technologies for early detection of AF, a large randomized clinical trial, the mHealth Screening to Prevent Stroke Trial, is currently in progress. Sponsored by the Scripps Translational Science Institute, the trial is being carried out in collaboration with Aetna. The study will match 2,100 patients at high risk, but not previously diagnosed with AF, against 4,000 controls. The monitored subjects are being provided with a wearable ECG recording patch (the iRhythm Zio patch) or with a wristband device developed by Amiigo (North Salt Lake, Utah) combined with a proprietary app that detects AF. The investigators will follow patients for three years to compare rates of AF diagnosis, incidence of stroke, and other consequences of AF, as well as differences in healthcare use and costs. The comparison population will be unscreened individuals using claims data from Aetna. The study began in November 2015 and is expected to conclude in September 2019.

What is to Be Done?

The reviews we examined frequently called for more regulation of medical apps. The U.S. Food and Drug Administration (FDA) considers an app to be a medical device, and hence subject to FDA premarket-approval requirements, if it meets the statutory definition of “medical device” (broadly, something that is used to diagnose or treat a disease). The AliveCor device and the iRhythm Zio patch both have FDA clearance as Class II devices, on the grounds that they are functionally equivalent to other established products (electrocardiographs and data-recording devices, respectively).

But many smartphone medical apps are not subject to FDA regulation because they do not meet its definition of medical devices. This group includes many apps intended to help patients manage chronic illnesses, such as those covered in the recent Cochrane Review. Some of these may pose significant risks to patients, raise privacy concerns, and/or be poorly designed or difficult for patients to use effectively. Under the new Trump administration, the FDA may well have little appetite for increasing its regulation of this technology.

App-rating systems, such as the recently proposed Mobile Application Rating Scale (MARS), can help raise the quality of apps by enforcing standards of usability and information quality. However, such ratings do not address the major question: How much do the apps improve patient outcomes?

For their part, healthcare providers and insurance companies can publicize high-quality apps. For example, insurance giant Humana lists on its website “five great healthcare apps.” But even recommended apps may still lack substantial clinical evidence for effectiveness. That kind of evidence is simply too expensive to obtain. One would have to sell many US$5.00 apps to be able to support even simple clinical trials, let alone conduct the much more extensive trials needed to establish improvements in patient outcomes.
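The break-even arithmetic behind that last point can be sketched in a few lines. Only the US$5.00 price comes from the text above; the trial budget and the 30% app-store commission are assumptions made for illustration, and the function name is our own.

```python
import math


def sales_needed(trial_cost_usd, app_price_usd, store_commission=0.30):
    """Number of app sales whose net revenue would cover a trial budget."""
    # Revenue the developer keeps per sale after the store's share (assumed).
    net_per_sale = app_price_usd * (1.0 - store_commission)
    return math.ceil(trial_cost_usd / net_per_sale)


# Hypothetical US$1 million budget; actual trial costs vary widely.
print(sales_needed(trial_cost_usd=1_000_000, app_price_usd=5.00))
```

The estimate ignores development, marketing, and support costs, all of which would push the required sales higher still.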

In important ways, smartphone technology for health care remains at what one firm (Gartner, Stamford, Connecticut) calls the “hype” stage of innovation, with excessive optimism by many people about the wonderful things a new technology can accomplish. With time, Gartner explains, the initial enthusiasm gives way to disillusionment as the limits of the technology become apparent. That is clearly happening now, with glowing promises about the use of smartphones in health care (such as the quotation with which we opened this article) being followed by numerous less-than-stellar evaluations of its medical effectiveness. Finally, as people gain more experience with the technology, they develop more realistic expectations about what it can accomplish, and durable success stories can emerge. This may well happen with mHealth technology to screen for silent AF, for example, but we will have to wait for the conclusion of a lengthy and expensive clinical trial to know for certain.

Works Consulted

- P. Posadzki, N. Mastellos, R. Ryan, L. H. Gunn, L. M. Felix, Y. Pappas, M.-P. Gagnon, S. A. Julious, L. Xiang, B. Oldenburg, and J. Car. (2016, Dec.). Automated telephone communication systems for preventive healthcare and management of long-term conditions. Cochrane Review. [Online].

- A. Alnasser, et al., “Development of ‘Twazon’: An Arabic App for Weight Loss,” JMIR Res. Protocols, vol. 5, no. 2, p. e76, 2016.

- M. Bardus, et al., “A review and content analysis of engagement, functionality, aesthetics, information quality, and change techniques in the most popular commercial apps for weight management,” Int. J. Behav. Nutr. Phys. Act., vol. 13, no. 1, p. 35, 2016.

- D. Con and P. De Cruz, “Mobile phone apps for inflammatory bowel disease self-management: a systematic assessment of content and tools,” JMIR mHealth and uHealth, vol. 4, no. 1, 2016.

- S. M. Coulon, C. M. Monroe, and D. S. West, “A systematic, multi-domain review of mobile smartphone apps for evidence-based stress management,” Amer. J. Prev. Med., vol. 51, no. 1, pp. 95–105, 2016.

- A. Darby et al., “A review of nutritional tracking mobile applications for diabetes patient use,” Diabet. Tech. & Ther., vol. 18, no. 3, pp. 200–212, 2016.

- R. de la Vega and J. Miró, “mHealth: A strategic field without a solid scientific soul. A systematic review of pain-related apps,” PLoS One, vol. 9, no. 7, p. e101312, July 2014.

- L. Desteghe et al., “Performance of handheld electrocardiogram devices to detect atrial fibrillation in a cardiology and geriatric ward setting,” EP Europace, vol. 19, no. 1, pp. 29–39, 2016.

- N. Kumar, M. Khunger, A. Gupta, and N. Garg, “A content analysis of smartphone–based applications for hypertension management,” J. Amer. Soc. Hypertens., vol. 9, no. 2, pp. 130–136, 2015.

- M. E. Larsen, J. Nicholas, and H. Christensen, “A systematic assessment of smartphone tools for suicide prevention,” PLoS One, vol. 11, p. e0152285, Apr. 2016.

- D. D. McManus et al., “A novel application for the detection of an irregular pulse using an iPhone 4S in patients with atrial fibrillation,” Heart Rhythm, vol. 10, no. 3, pp. 315–319, 2013.

- A. Radovic et al., “Smartphone applications for mental health,” Cyberpsych., Behav., Soc. Network, vol. 7, pp. 465–470, 2016.

- A. Soni et al., “High burden of unrecognized atrial fibrillation in rural India: an innovative community-based cross-sectional screening program,” JMIR Pub. Health Surv., vol. 2, no. 2, 2016.

- J. S. Taggar et al., “Accuracy of methods for detecting an irregular pulse and suspected atrial fibrillation: a systematic review and meta-analysis,” Eur. J. Prev. Card., vol. 23, no. 12, pp. 1330–1338, 2016.