Genomic research is the driver of efforts to create personalized medicine and discover cures for cancer, rare diseases, and other serious health conditions. Thanks to the widespread availability of inexpensive genome sequencing technology, the field is expanding rapidly. Many countries plan to sequence significant proportions of their population, and individuals are using genome sequences to discover more about themselves. The (no doubt already sequenced) elephant in the room is that each whole genome produces a data file of around 100 GB. If the demand for genome sequences continues at its current rate, genomic sequencing could be producing 40 exabytes of data per year by 2025 [1].

Technology of Data Storage

Genomic data present unique challenges. The files are extremely large; they are considered sensitive, personal information; and they need to be maintained in a manner that supports continuous discovery and then retained to meet regulatory compliance requirements. Thus, we can expect genomic data to be kept in a secure, always-available storage tier. Historically, that tier has been provided by on-premise storage systems.

With many research institutions and commercial players still handling data sets that total terabytes, current on-premise and cloud storage arrangements are just able to cope. However, the demand for genome sequences is overwhelming these systems in many organizations. The elastic nature of storage and compute in the cloud makes it an attractive option, but moving large data sets to the cloud is time-consuming. Transfers can take many days to complete. When using the cloud, the time to move files from storage to compute instances to actually analyze the data also creates a bottleneck.
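To make the "many days" figure concrete, the following is a rough back-of-the-envelope sketch rather than a measurement. The 1 Gbit/s link, the 80% sustained efficiency, and the 500 TB data set size are illustrative assumptions that do not come from the text; only the 100 GB per-genome file size does.

```python
def transfer_days(dataset_bytes: float, link_bits_per_second: float,
                  efficiency: float = 0.8) -> float:
    """Estimate the days needed to move a data set over a network link.

    `efficiency` is an assumed fraction of the nominal link speed that is
    actually sustained (protocol overhead, contention, and so on).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = dataset_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / 86_400  # seconds per day


GB = 10**9
TB = 10**12
LINK = 1e9  # assumed 1 Gbit/s connection

one_genome_days = transfer_days(100 * GB, LINK)  # one ~100 GB genome file
data_set_days = transfer_days(500 * TB, LINK)    # hypothetical 500 TB data set

print(f"one genome: {one_genome_days * 24 * 60:.1f} minutes")
print(f"500 TB data set: {data_set_days:.1f} days")
```

Under these assumptions a single genome moves in minutes, but a data set in the hundreds of terabytes takes weeks, which is why file size matters more as collections grow.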

One further consideration is critical: security. Although much of the data used in research is anonymized, any data breach would lead to significant reputational damage. For companies offering sequencing to consumers, the link between the individual and their data is more direct, making security an even greater concern. It is tempting to feel comforted by the physical presence of on-premise storage controlled by known procedures, compared to the out-of-sight nature of the cloud, especially if cloud use requires crossing jurisdictional boundaries. However, the security models of the major cloud vendors generally follow industry best practice.

Economics of Data Storage

Large-scale genomics research has been made possible by the dramatic reduction in sequencing costs. In fact, the cost of a megabase of sequence has dropped over a million-fold in the last 20 years, and the cost to sequence a human genome is now routinely less than US$1,000. Similarly, computing costs per GFLOP have plummeted by a factor of approximately 100,000. However, in the same time period, the cost of a GB of disk storage has dropped by only 500×. Recently, cloud storage costs appear to have hit a plateau: there have been no significant reductions in the cloud storage prices of the major vendors for the past two years.
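The gap between these three cost curves is easier to see when each fold-reduction is converted into a halving time. The sketch below assumes a constant exponential decline over the 20-year period, which is a simplification; the fold-reduction figures are the ones quoted above.

```python
import math


def halving_time_years(fold_reduction: float, years: float) -> float:
    """Years needed for the cost to halve, assuming a steady exponential decline."""
    return years * math.log(2) / math.log(fold_reduction)


PERIOD_YEARS = 20
fold_reductions = {
    "sequencing (per megabase)": 1_000_000,
    "compute (per GFLOP)": 100_000,
    "disk storage (per GB)": 500,
}

for name, fold in fold_reductions.items():
    print(f"{name}: cost halves roughly every "
          f"{halving_time_years(fold, PERIOD_YEARS):.2f} years")

# Ratio of the two fold-reductions: how far storage has lagged behind sequencing.
lag = fold_reductions["sequencing (per megabase)"] / fold_reductions["disk storage (per GB)"]
print(f"sequencing costs fell {lag:.0f}x further than storage costs")
```

On these figures, sequencing cost has halved about once a year, while storage cost has taken more than two years per halving.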

Sequencing technology has capacity for further price reductions. The shift from CPUs to GPUs and field-programmable gate arrays will drive down compute costs. In contrast, the demand for faster SSD storage is likely to drive the price for data storage higher. This combination of factors leads to data storage becoming the largest drain on a genomics project’s budget.

As research projects and sequencing companies grow, the question of how to manage older data becomes pertinent. At the moment, processing sample data for case analysis and reporting requires a “hot tier” data persistence infrastructure. In the future, aggregation and analysis of cohort data will become important for conducting research on larger sample populations and, in the case of sequencing companies, developing secondary data products. Cultivating these data requires long-term, but accessible (warm-tier), storage.

Computational reproducibility is another key concern, as changes in software often affect the results of analyses and introduce biases that affect research and clinical testing. This means that not only must older data be retained, but also the particular software toolchain that was used to analyze it. Containerization of toolchains, such as with Docker, is already a popular means of preserving reproducibility. However, this means that genomic data must be preserved in a form that is still accessible to these older tools.

Faced with this challenge, data stewards and infrastructure managers express concern about their ability to scale data storage operations. The lifecycle of genomic data is becoming faster than the procurement cycle to buy, install, and integrate on-premise storage hardware. Cloud storage is becoming the de facto option for scale-out data storage.

Data Storage in the Analysis Workflow

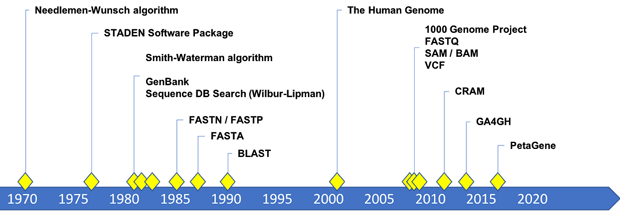

The primary body for setting international genomic standards is the Global Alliance for Genomics and Health (GA4GH), which has more than 500 member organizations from over 70 countries. The BAM and VCF file formats it maintains, together with the FASTQ format, are universally adopted across industry and academia.

There is a canonical workflow for the analysis of genome sequences that has been adopted widely within the bioinformatics community. It begins with primary analysis which involves mapping and aligning reads in FASTQ data sets to a reference genome. The alignments are stored in a BAM file which undergoes secondary analysis to discover variations and produce a VCF file. A clinical report will be generated from the VCF file and verified with the BAM data by an analyst. These data are then aggregated for larger scale studies and population-scale analysis to progress our understanding of genomic variation and traits such as disease, or response to treatments.
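As an illustration of this workflow, the sketch below assembles the shell commands for one sample without running them. The choice of tools (`bwa`, `samtools`, `bcftools`), the paired-end file naming, and the sample and reference names are assumptions made for the example; the text does not prescribe any particular toolchain, and the clinical reporting step is omitted.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    produces: str  # output file of this stage
    command: str   # shell command line (built here, not executed)


def build_workflow(sample: str, reference: str, threads: int = 8) -> list[Stage]:
    """Build FASTQ -> BAM -> VCF commands for one paired-end sample."""
    q = shlex.quote
    fq1, fq2 = f"{sample}_R1.fastq.gz", f"{sample}_R2.fastq.gz"  # assumed naming
    bam, vcf = f"{sample}.bam", f"{sample}.vcf.gz"
    return [
        Stage(
            "primary analysis: map and align reads",
            bam,
            f"bwa mem -t {threads} {q(reference)} {q(fq1)} {q(fq2)}"
            f" | samtools sort -o {q(bam)} -",
        ),
        Stage("index alignments", f"{bam}.bai", f"samtools index {q(bam)}"),
        Stage(
            "secondary analysis: call variants",
            vcf,
            f"bcftools mpileup -f {q(reference)} {q(bam)}"
            f" | bcftools call -mv -Oz -o {q(vcf)}",
        ),
    ]


for stage in build_workflow("sample1", "reference.fa"):
    print(f"# {stage.name} -> {stage.produces}")
    print(stage.command)
```

Note that both the FASTQ inputs and the BAM output stay on storage after the VCF is produced, since the analyst verifies the report against the BAM data.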

The fact that genomic data is useful for individual case analysis, and large population analyses, drives the need to maintain these data in an always-available state. This presents a challenge since both the FASTQ.gz file and the BAM file for each whole genome are about 100 GB. Smart management of these data will become an important factor to the proper stewardship of genomic information.
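The per-genome figures above scale quickly. The following sketch simply multiplies them out for a few cohort sizes; the cohort sizes are hypothetical, and the calculation ignores VCF files, replicas, and backups.

```python
GB = 10**9
TB = 10**12
PB = 10**15


def cohort_footprint_bytes(genomes: int,
                           fastq_gz_bytes: int = 100 * GB,
                           bam_bytes: int = 100 * GB) -> int:
    """Storage needed to keep both the FASTQ.gz and the BAM file for every genome."""
    return genomes * (fastq_gz_bytes + bam_bytes)


for genomes in (1_000, 100_000, 1_000_000):  # hypothetical cohort sizes
    total = cohort_footprint_bytes(genomes)
    print(f"{genomes:>9,} genomes: {total / TB:>9,.0f} TB ({total / PB:,.1f} PB)")
```

At about 200 GB per genome, a thousand genomes already occupy roughly 200 TB, and a million genomes reach the hundreds of petabytes.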

Overall, the genomic research workflow is experiencing strain. As more genomes are sequenced and more is invested to discover breakthrough medical advances, the time pressure to analyze data and produce results is increasing. Collaboration between teams in a single organization or across institutions can help alleviate this. However, large data files make sharing or moving the data needed for that collaboration time-consuming. Current file sizes and their associated problems are a barrier to the speed and computational efficiency that scaling research requires.

Where Does Compression Fit In?

Data compression has an important role in genomic data management. Reducing the storage space required without losing any information lowers the cost of storing genomic data without sacrificing any scientific value. Smaller files reduce the bandwidth necessary to move and share data, and they pass through I/O bottlenecks more quickly, which speeds up processing. FASTQ.gz and BAM data files already use GZIP compression to reduce the bulk of these data files. However, general-purpose compression, like GZIP, does not provide optimal compression of genomic data, and a considerable amount of storage space is being wasted. Adoption of newer, optimized compression technologies will reduce this waste. Smarter compression will reduce storage demands without new formats that could require re-tooling of the technical infrastructure of genomic data analysis.

What Compression Options Are Available?

As mentioned above, general-purpose compression techniques have been applied to genomic data. The DEFLATE algorithm, in the format of GZIP, is commonly applied to FASTQ files and used to create BAM files from the basic SAM file format. It has the benefit of being lossless, so it preserves all the data. It is also free to use and open source. Yet, being a generic method it does not take advantage of specific redundancies in genomic data. This means that the compression ratios achieved are suboptimal.
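A toy experiment illustrates why a generic method leaves space on the table. The sketch below compares GZIP with a simple 2-bits-per-base packing on a synthetic sequence. It is not how any production genomic compressor works: it assumes the input contains only A, C, G, and T, it ignores read names and quality scores, and it uses random bases, which lack the repeats found in real genomes.

```python
import gzip
import random

BASE_TO_BITS = {"A": 0, "C": 1, "G": 2, "T": 3}
BITS_TO_BASE = "ACGT"


def pack_2bit(seq: str) -> bytes:
    """Pack an A/C/G/T string into 2 bits per base (4 bases per byte)."""
    out = bytearray()
    for i in range(0, len(seq), 4):
        chunk = seq[i:i + 4]
        byte = 0
        for base in chunk:
            byte = (byte << 2) | BASE_TO_BITS[base]
        byte <<= 2 * (4 - len(chunk))  # left-align a short final chunk
        out.append(byte)
    return bytes(out)


def unpack_2bit(packed: bytes, n_bases: int) -> str:
    """Invert pack_2bit; n_bases trims the padding in the last byte."""
    bases = []
    for byte in packed:
        for shift in (6, 4, 2, 0):
            bases.append(BITS_TO_BASE[(byte >> shift) & 0b11])
    return "".join(bases[:n_bases])


rng = random.Random(0)  # fixed seed so the run is repeatable
seq = "".join(rng.choice("ACGT") for _ in range(100_000))

raw = seq.encode("ascii")
gz = gzip.compress(raw, compresslevel=9)
packed = pack_2bit(seq)

assert unpack_2bit(packed, len(seq)) == seq  # the packing is lossless

print(f"raw text:     {len(raw):>7} bytes")
print(f"gzip -9:      {len(gz):>7} bytes")
print(f"2-bit packed: {len(packed):>7} bytes")
```

Knowing that the alphabet has only four letters is enough for the packed form to come out smaller than the GZIP output here, while still being fully reversible. Purpose-built tools exploit much richer structure than this.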

There are several published algorithms purpose-built for genomic data compression. Some of these focus on the optimal compression of the sequence data alone; others focus on lossy compression of quality scores, and associated metadata. Optimized lossless compression tools for both FASTQ and BAM data files are less common.

GA4GH specify a reference-based compressed file format called CRAM which improves on BAM with higher compression ratios, but requires a reference genome for compression and decompression of data to be possible. CRAM is now supported by key software toolchains; however, it is not as universally supported as BAM is. After compressing a BAM file to CRAM, it is also generally not possible to recover the original BAM file, and in certain cases fields from the original BAM file are not correctly preserved by CRAM compression, affecting computational reproducibility.
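The idea behind reference-based compression, and the dependency it creates, can be shown with a deliberately simplified sketch. This is not the CRAM format: it handles substitutions only (no insertions, deletions, quality scores, or metadata), and the function names and sequences are invented for the example.

```python
def encode_read(reference: str, position: int, read: str):
    """Store a read as (position, length, mismatches) relative to the reference."""
    window = reference[position:position + len(read)]
    if len(window) != len(read):
        raise ValueError("read extends past the end of the reference")
    mismatches = [
        (offset, base)
        for offset, (base, ref_base) in enumerate(zip(read, window))
        if base != ref_base
    ]
    return position, len(read), mismatches


def decode_read(reference: str, record) -> str:
    """Rebuild the read; this only works with the same reference used to encode."""
    position, length, mismatches = record
    bases = list(reference[position:position + length])
    for offset, base in mismatches:
        bases[offset] = base
    return "".join(bases)


reference = "ACGTACGTTAGC"
read = "TACGATAG"  # matches reference[3:11] except for one base

record = encode_read(reference, 3, read)
print(record)  # position, length, and the single differing base

assert decode_read(reference, record) == read

# Decoding against a different reference silently yields a different read.
other_reference = "ACGTTCGTTAGC"
assert decode_read(other_reference, record) != read
```

The record is much smaller than the read itself, but it is meaningless without the exact reference it was encoded against, which is the trade-off described above.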

Given this environment, it would seem that scope exists for a lossless commercial alternative that works with current storage technologies and with existing and legacy software toolchains. A genomic data software company, PetaGene, offers a commercially supported tool called PetaSuite for lossless compression of FASTQ and BAM files, optimized for genomic data and without needing a reference (Figure 1). PetaSuite also conforms with community standards, as laid down by the GA4GH. Native and automatic integration with existing and future analysis tools—and with local and cloud storage without the need for copying data between storage and compute instances—ensures that PetaSuite can be part of the current workflow for researchers and sequencing companies.

Opportunities Offered by Use of Smart Compression

If genomics research is to deliver on its promise, using smart and efficient data compression will be the key to unlocking that potential. Reducing the waste in genomic data storage with optimized, lossless compression, along with the associated cost savings, means the ability to repurpose budgets for higher value operations. By using compression to reduce the time taken to get data to and from storage, greater collaboration is possible. Analysis can also be quicker thanks to smaller files lowering I/O demands. And, if the compressed data conforms to established standards, there will be no need to devote precious resources to re-working the analysis tools already in use. All of these should add up to achieving better results, more quickly.

Genomics is already producing enough data to rival the likes of data behemoths such as YouTube and Twitter. Rightly, that is not likely to change. So, smart compression techniques that are specifically designed for genomic data and its workflows will be crucial in bringing personalized medical innovations to everyone.

Reference

[1] Z. D. Stephens et al., “Big data: Astronomical or genomical?” PLoS Biol., vol. 13, no. 7, p. e1002195, 2015.